A well-optimized robots.txt file can help improve the performance of your WordPress website and reduce server load.

What is robots.txt?

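A robots.txt file is a simple text file stored on a website that tells search engine crawlers which pages they may and may not crawl. Website operators use robots.txt to keep crawlers away from certain areas of their site, for example private or irrelevant content. Note that robots.txt is a request to well-behaved crawlers, not an access control: the file is publicly readable, and a blocked URL can still appear in search results if other pages link to it.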

The file must be located in the root directory of the website (for example https://example.com/robots.txt), because crawlers do not look for it anywhere else. It contains Disallow and Allow rules for search engine bots, grouped by User-agent. It is a useful way to control which content gets crawled and to support the SEO performance of the website.
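The location rule and the basic Disallow logic can be illustrated with Python's standard library. This is a minimal sketch: example.com and the paths are placeholders, and `urllib.robotparser` only performs simple prefix matching (no `*` or `$` wildcards), which is enough for a plain `Disallow: /cart/` rule.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """Crawlers only request robots.txt from the root of the host."""
    return urljoin(page_url, "/robots.txt")


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    # Any page URL on the host maps to the same robots.txt location
    print(robots_url("https://example.com/shop/shirts/?orderby=price"))
    # → https://example.com/robots.txt
    parser = build_parser("User-agent: *\nDisallow: /cart/\n")
    print(parser.can_fetch("Googlebot", "https://example.com/cart/"))           # False
    print(parser.can_fetch("Googlebot", "https://example.com/product/shirt/"))  # True
```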

By excluding unnecessary content from crawling, you direct search engines' attention to the really important pages and content. A well-thought-out robots.txt file helps you use the crawl budget efficiently.

Why optimize robots.txt for WordPress?

The robots.txt file is used to control search engine bots and tell them which pages or directories on your website they should, or should not, crawl. This is especially relevant for large websites with many subpages, such as WooCommerce stores, because they generate many pages that are irrelevant for search, such as the shopping cart or checkout.

The goals of an optimized robots.txt:

- Reduced server load through fewer unnecessary crawler requests.

- Better use of the crawl budget, so that search engines focus on relevant pages.

- Preventing duplicate content (e.g. URLs created by filter and sort options in WooCommerce).
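To make the duplicate-content point concrete, the sketch below builds the sorted variants of a single category page. The category URL and the page count are hypothetical; the `orderby` values are the ones blocked in the example robots.txt below. All six URLs list the same products in a different order, and the count multiplies with every paginated page and every filter.

```python
from urllib.parse import urlencode

# Hypothetical category URL and page count, for illustration only
CATEGORY_URL = "https://example.com/product-category/shirts/"
PAGES_IN_CATEGORY = 10

# The orderby values blocked in the example robots.txt
ORDERBY_VALUES = ["popularity", "rating", "date", "price", "price-desc"]


def sort_variants(base_url: str, values: list[str]) -> list[str]:
    """Return the plain URL plus one URL per sort order; all show the same products."""
    return [base_url] + [f"{base_url}?{urlencode({'orderby': v})}" for v in values]


if __name__ == "__main__":
    variants = sort_variants(CATEGORY_URL, ORDERBY_VALUES)
    for url in variants:
        print(url)
    # Every paginated page of the category gets the same set of variants
    total = len(variants) * PAGES_IN_CATEGORY
    print(f"{total} crawlable URLs for {PAGES_IN_CATEGORY} pages of product listings")
```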

Example of an optimized robots.txt for WooCommerce

Here is an example of an optimized robots.txt that blocks unnecessary WooCommerce pages as well as certain filtering and sorting parameters. This configuration suits stores that want search engine bots to focus on the product pages rather than on the shopping cart or checkout.

Example of a Robots.txt

```text
# Block WooCommerce pages and parameters
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /my-account/
# If your store uses translated page slugs, add Disallow lines for those too
Disallow: /*?orderby=price
Disallow: /*?orderby=rating
Disallow: /*?orderby=date
Disallow: /*?orderby=price-desc
Disallow: /*?orderby=popularity
Disallow: /*?filter
Disallow: /*add-to-cart=*
Disallow: /*?add_to_wishlist=*
Disallow: /*?add-to-compare=*
Disallow: /*rating_filter=*
Disallow: /*?wg-choose-original=*
# Block search pages and parameters
Disallow: /search/
Disallow: /search
Disallow: /*?s=*
Disallow: /*?p=*
Disallow: /*&p=*
Disallow: /*&preview=*
Disallow: /*?wmc-currency=*
Disallow: /*?et-wishlist-page&add_to_wishlist=*
Disallow: /*&botiga_buy_now=1
```
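To check which URLs a configuration like this actually blocks, you can evaluate it locally. Python's built-in `urllib.robotparser` cannot evaluate the `*` wildcards used here, so the sketch below implements the matching rules from RFC 9309, which Google follows: `*` matches any sequence of characters, a trailing `$` anchors the end of the URL, the longest matching pattern wins, and Allow wins a tie. It is a simplified sketch under stated assumptions: the function names are hypothetical, it only collects rules for `User-agent: *`, it skips percent-encoding normalization, and `ROBOTS_TXT` is an excerpt of the configuration above.

```python
import re

# Excerpt of the example configuration (not the full file)
ROBOTS_TXT = """
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /my-account/
Disallow: /*?orderby=price
Disallow: /*add-to-cart=*
Disallow: /*?s=*
Disallow: /search
"""


def parse_star_rules(text: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs from every group that names 'User-agent: *'."""
    rules: list[tuple[str, str]] = []
    group_agents: list[str] = []
    in_rules = False  # True once the current group has started listing rules
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                group_agents, in_rules = [], False
            group_agents.append(value)
        elif key in ("allow", "disallow"):
            in_rules = True
            if "*" in group_agents:  # groups for the same agent are combined
                rules.append((key, value))
    return rules


def pattern_matches(pattern: str, path: str) -> bool:
    """'*' matches any character sequence; a trailing '$' anchors the end of the URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    if anchored:
        return re.fullmatch(regex, path) is not None
    return re.match(regex, path) is not None  # prefix match from the start of the path


def is_allowed(rules: list[tuple[str, str]], path_and_query: str) -> bool:
    """Longest matching pattern wins; on a tie, Allow wins. No match means allowed."""
    verdict, best = True, -1
    for directive, pattern in rules:
        if not pattern or not pattern_matches(pattern, path_and_query):
            continue  # an empty pattern blocks nothing
        length = len(pattern)
        if length > best or (length == best and directive == "allow"):
            verdict, best = directive == "allow", length
    return verdict


if __name__ == "__main__":
    rules = parse_star_rules(ROBOTS_TXT)
    for path in ["/cart/", "/product/blue-shirt/", "/shop/?orderby=price", "/?s=shoes"]:
        print(f"{path:24} {'allowed' if is_allowed(rules, path) else 'blocked'}")
```

Pass the path plus query string (for example `/shop/?orderby=price`), since the parameter rules only match when the query is included.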

Explanations of the entries

- Block WooCommerce-specific pages: Pages like /cart/, /checkout/ and /my-account/ are intended for customers, not for search engines, and should be blocked.

- Exclude sorting and filtering parameters: WooCommerce URLs that carry sorting or filtering options such as orderby=price or orderby=rating often create duplicate content and can strain the crawl budget.

- Block search pages and parameters: Internal search pages and URL parameters that produce specific search results (?s=) or preview content (preview=) should also be excluded. They usually provide no added value to search engines and could otherwise be crawled and indexed.

- Exclude currency and wishlist parameters: URLs that switch the currency or add products to a wishlist are also irrelevant to search engines and consume crawling resources unnecessarily.

Tips for implementation

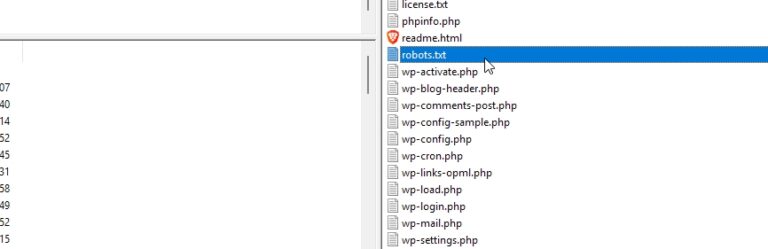

- Edit robots.txt in WordPress: By default, WordPress serves a virtual robots.txt. You can manage it in the WordPress admin area with an SEO plugin like Yoast or Rank Math, or upload a physical robots.txt file directly to the root directory of your website, which then takes precedence over the virtual one.

- Be careful with Disallow rules: Only block pages that add no value for indexing. Excessive blocking can hide relevant content from search engines.

- Check and test: Use Google Search Console to see how Google fetches and interprets your robots.txt. This helps to identify errors early on.
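Typical mistakes such as a space inside a path, a duplicated rule, a misspelled directive or a pattern without a leading slash are easy to catch automatically before you upload the file. The following is a minimal lint sketch; `lint_robots` is a hypothetical helper, the set of known directives and the checks are assumptions chosen for illustration rather than a complete validator, and duplicates are only detected within a single User-agent group.

```python
# Assumed set of directives to accept; extend it if you use others
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for common robots.txt mistakes."""
    problems: list[tuple[int, str]] = []
    seen_rules: set[tuple[str, str]] = set()
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        if ":" not in line:
            problems.append((lineno, "missing ':' after the directive"))
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key not in KNOWN_DIRECTIVES:
            problems.append((lineno, f"unknown directive {key!r}"))
        elif key == "user-agent":
            seen_rules = set()  # duplicates are only checked within one group
        elif key in ("allow", "disallow") and value:
            if not value.startswith("/"):  # RFC 9309 patterns start with '/'
                problems.append((lineno, "path should start with '/'"))
            if " " in value:
                problems.append((lineno, "path contains a space"))
            if (key, value) in seen_rules:
                problems.append((lineno, "duplicate rule in this group"))
            seen_rules.add((key, value))
    return problems


SAMPLE = "\n".join([
    "User-agent: *",
    "Disallow: /cart/",
    "Disallow: /shopping cart/",
    "Disallow: /checkout/",
    "Disallow: /checkout/",
    "Disallow: /*?orderby= price",
    "Disallow: *?s=*",
    "Disalow: /wp-admin/",
])

if __name__ == "__main__":
    for lineno, message in lint_robots(SAMPLE):
        print(f"line {lineno}: {message}")
```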

How to test your robots.txt

Option 1: Use an online tester, e.g. the one from SE Ranking.

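Option 2: Use Google Search Console

Google has replaced its former robots.txt tester with the robots.txt report and the URL Inspection tool. It looks like this:

- Open Google Search Console: Sign in, select your property and open the robots.txt report under Settings.

- Review the robots.txt file: The report shows the robots.txt files Google found for your site, when they were last crawled, and any warnings or errors.

- Check a URL: Enter the URL you want to test in the URL Inspection tool; the result indicates whether crawling is blocked by robots.txt.

- Test adjustments: Modify robots.txt if necessary and test the changes, for example with an online tester, before going live. After publishing, you can request a recrawl of the file from the robots.txt report.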

Conclusion

With a well-structured robots.txt file, you can improve the SEO performance of your WordPress website by blocking irrelevant pages and making better use of the crawl budget. Review the file regularly and adapt it to new content or page structures so that it stays effective.